AI & African Creativity: Who Keeps the Copyright?

Tools have always been extensions of human imagination - brushes, cameras, synthesisers - and now, generative AI joins that lineage. For artists, marketers, and technologists across Africa, the question is not whether the tool will exist, but how it will be governed so it enlarges opportunity instead of narrowing it. This piece maps the practical landscape: where public investment has begun to build foundational models, where brands use GenAI for high‑tempo engagement, and how musicians and filmmakers must steward authenticity, rights and value so that communities, not outsiders, reap the benefits.

How AI Can Lift African Artists — Without Taking Their Voice

What we know from the field

Three clear patterns emerge from recent case studies:

(1) Public investment and open models (Nigeria’s NCAIR initiatives and the NCAIR1 entry on Hugging Face) create capability and signal national priority.

(2) Brands and distributors use GenAI early and cheaply for promotion and fan engagement (DSTV/GOtv matchday banter), while production adoption in sectors like Nollywood remains cautious and distribution-first.

(3) Private startups are building culturally focused products (KushLingo’s language and cultural learning platform).

At the same time, there are consistent gaps: a shortage of public, consented local datasets; unclear commercialization and IP pathways; and few visible outcome metrics tying funding to jobs or revenue.

These patterns shape practical tradeoffs. Public models lower technical barriers; promotional use-cases show immediate ROI in reach and speed; production-scale adoption requires localized data, IP clarity, and economics that protect creators.

Key technical and policy gaps to close

- Dataset provenance and localization: Open models are useful, but few consented, local datasets are publicly available, undermining cultural authenticity and bias mitigation.

- Transparent model origin: Model cards, training code, and licensing are essential to auditability and responsible reuse.

- Commercial pathways: Training and startup grants are necessary but insufficient without procurement pilots, follow‑on capital, and market access to prevent talent drain.

- Governance and redress: Ethics commitments must be operationalized through audits, complaint mechanisms, and visible KPIs.

From Matchday Memes to Movie Drops: AI That Empowers Creatives

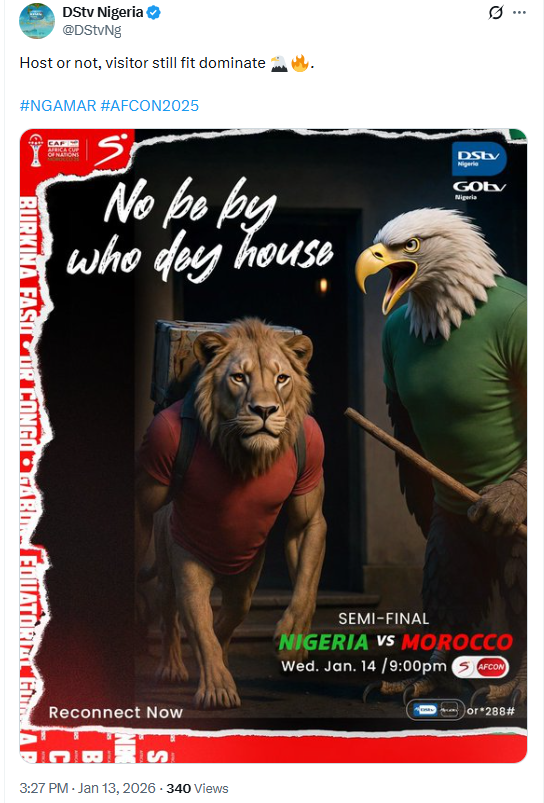

Case: GenAI as high‑tempo engagement — DSTV/GOtv matchday banter

The DSTV/GOtv example shows how regional media handles use AI‑generated images to spark cross‑market banter around football spectacles, including during Africa Cup of Nations (AFCON) matches. The tactic’s strengths are speed, low cost, and local relevance when paired with human editorial judgment. Its shortcomings are predictable: lack of disclosure, risk of misrepresenting likenesses, and cultural missteps when output is not human‑reviewed.

Operational blueprint for brands (technical + editorial)

- Brief & Tone Guardrails: Define acceptable humor bounds, prohibited content (deepfakes of players, slurs), and escalation thresholds.

- Model & Provenance: Use audited models with accessible model cards; log which model and prompt generated each asset.

- Prompt & Generation Pipeline: Implement a generation pipeline that produces N variants, tags each with metadata (model version, seed, prompt hash), and stores the variants in a content repository.

- Human Review & Cultural Sign‑Off: Route assets through local cultural reviewers before publishing; keep review audit trails.

- Disclosure & Attribution: Add clear captioning or hashtags indicating AI generation and the model when appropriate (improves trust and auditability).

- Post‑Mortem & KPIs: Capture impressions, engagement rate, sentiment ratio, follower lift and conversion to offers/subscriptions; feed learnings back into prompt designs and guardrails.
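
The generation and logging steps above can be sketched in a few lines of Python. This is a minimal illustration, not a description of any broadcaster's actual pipeline: the `VariantRecord` fields, the `plan_variants` helper, and the model name `hypothetical-image-model-v1` are all assumptions made for the example, and image generation itself is left out.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class VariantRecord:
    """Metadata stored alongside each generated asset (hypothetical schema)."""
    campaign: str
    model_version: str
    seed: int
    prompt_hash: str
    ai_generated: bool = True  # lets the publishing step add a disclosure caption


def hash_prompt(prompt: str) -> str:
    """SHA-256 of the prompt text, so logs can omit the raw prompt if needed."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()


def plan_variants(campaign, prompt, model_version, n, base_seed=0):
    """Return one metadata record per variant, each with its own seed."""
    digest = hash_prompt(prompt)
    return [
        VariantRecord(campaign, model_version, base_seed + i, digest)
        for i in range(n)
    ]


records = plan_variants(
    campaign="afcon-matchday",
    prompt="Playful rivalry poster, no real player likenesses",
    model_version="hypothetical-image-model-v1",  # assumed name, not a real model
    n=3,
)
for record in records:
    print(json.dumps(asdict(record)))
```

Storing the seed with the model version and prompt hash is what makes a published asset reproducible during a later audit or complaint.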

System architecture sketch for safe, repeatable creative flows

A minimal MLOps + CMS integration for social creatives:

- Continuous Integration (CI): Prompt repository with version control (Git-like) and prompt templates per campaign.

- Generation service: Containerized inference nodes (GPU or hosted API) that accept prompt templates and return N variants with cryptographic hashes.

- Metadata and provenance store: A small database recording model version, prompt text (or prompt hash for IP reasons), timestamp, reviewer ID, and sign-off logs.

- Editorial queue in CMS: Human reviewers access generated variants alongside provenance metadata; approved assets push to social scheduler with a disclosure flag.

- Analytics and attribution: UTM-enabled scheduling plus sentiment analysis to measure performance and flag escalations.
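
As a rough sketch of the provenance store and the sign-off gate described above, the following uses Python's built-in `sqlite3` module. The table layout, the function names, and the UTM values are assumptions chosen for illustration; a production system would sit behind the CMS and use its own schema.

```python
import sqlite3
from datetime import datetime, timezone
from urllib.parse import urlencode

# In-memory database for the example; a real store would be persistent.
conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE provenance (
        asset_hash    TEXT PRIMARY KEY,
        model_version TEXT NOT NULL,
        prompt_hash   TEXT NOT NULL,
        created_at    TEXT NOT NULL,
        reviewer_id   TEXT,
        approved      INTEGER NOT NULL DEFAULT 0
    )"""
)


def record_asset(asset_hash, model_version, prompt_hash):
    """Log a generated asset before any human has reviewed it."""
    conn.execute(
        "INSERT INTO provenance (asset_hash, model_version, prompt_hash, created_at) "
        "VALUES (?, ?, ?, ?)",
        (asset_hash, model_version, prompt_hash,
         datetime.now(timezone.utc).isoformat()),
    )


def sign_off(asset_hash, reviewer_id):
    """Record which reviewer approved the asset."""
    conn.execute(
        "UPDATE provenance SET reviewer_id = ?, approved = 1 WHERE asset_hash = ?",
        (reviewer_id, asset_hash),
    )


def publishable(asset_hash):
    """An asset may be scheduled only if a named reviewer approved it."""
    row = conn.execute(
        "SELECT approved, reviewer_id FROM provenance WHERE asset_hash = ?",
        (asset_hash,),
    ).fetchone()
    return bool(row and row[0] == 1 and row[1])


def tracked_link(base_url, campaign):
    """Append UTM parameters so analytics can attribute traffic to the post."""
    params = {"utm_source": "x", "utm_medium": "social", "utm_campaign": campaign}
    return f"{base_url}?{urlencode(params)}"


record_asset("asset-001", "hypothetical-image-model-v1", "prompt-hash-001")
print(publishable("asset-001"))  # False until a reviewer signs off
sign_off("asset-001", "reviewer-17")
print(publishable("asset-001"))  # True after sign-off
```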

Nollywood, Indian Cinema and Distribution-First Strategies

Why Nollywood is choosing distribution over production AI, and how to leverage that choice

Where Indian cinema shows fast production-side AI adoption, Nollywood’s visible shift is toward open, incentive-driven distribution (YouTube, social platforms). That is a pragmatic approach. Incentive-driven platforms deliver reach and near-term monetisation without the tech risk and dataset needs of production‑scale AI. But reach alone doesn't guarantee creator prosperity.

Actions for film industry leaders and CTOs

- Start small with production augmentation: Pilot AI for subtitling, dubbing, pre-visualization and promo assets before experimenting with synthetic likenesses or voice cloning.

- Data commons for dialogue and music motifs: Create consented corpora from festival screenings, writer archives and licensed dialogues to fine-tune models for local idiom and cadence.

- Procurement pilots: Commit to public-sector or broadcaster procurement with a quota for local AI-enabled vendors, creating predictable revenue paths for startups.

- Legal templates: Develop standard actor and writer consent clauses covering synthetic use (voice, likeness), royalty splits and benefit-sharing where public funds underpin training data.

AI & African Creativity: Empowerment or Extraction?

A songwriter remembers the first time a beat opened a door: not a door that closed behind them, but one that widened a street for neighbors to sell their wares, for cousins to learn a chorus, for an elder’s story to be sung again. Technology has always been a tool of opening and narrowing; the question before us is whether the next wave of tools will lift more hands to play, or quietly take the instrument. That question is a wake-up call for the leaders who must steward the choice: marketers who commission work, CTOs who pick models, founders who design business models, and policymakers who decide who benefits. We look at concrete examples (such as AI‑generated music and language apps) and translate them into technical guardrails and practical actions so that AI empowers African creativity rather than replacing it.

AI and Afrobeat: Opportunity, Risk, and a Framework to Protect Cultural Soul

How AI can expand access and skills

Generative tools lower entry costs for beat-making, sampling, and arrangement. Talent without studio budgets can iterate faster, learn production techniques, and prototype marketable songs. That can reduce friction into professional pathways and, if connected to monetisation mechanisms, reduce unemployment in the creative economy.

Risks that strip value from artists

- Homogenization: Overreliance on the same models and sample pools risks making new tracks sound similar, eroding distinctiveness.

- Copyright & voice cloning: Synthetic recreations of established artists or uncleared samples expose creators and platforms to legal action and moral harms.

- Value leakage: Open models trained on local works without benefit-sharing can channel commercial value abroad.

A short framework for music: Assist structure, preserve soul

- Clear training provenance: Do not train on copyrighted music unless licensed; publish dataset manifests and licences.

- Consent-first voice/likeness policy: Require explicit written permission for voice or persona cloning and establish revenue-share terms.

- Creative co‑design labs: Fund partnerships where producers and AI engineers co-create models biased toward local style, with creators as stakeholders.

- Watermarking & content ID: Embed robust watermarks or metadata into AI‑generated audio so platforms can detect provenance and attribute revenue.

- Local monetisation channels: Tie AI tools to local distribution (digital sales, membership, merchandising) and commissioning programs for artists who co‑design assets.
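
The provenance and consent rules in this framework can be enforced mechanically before any training run. The sketch below assumes a hypothetical manifest format and an illustrative allow-list of licences; which licences are actually acceptable is a legal decision, not something the code settles.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative policy only: the real allow-list should come from legal review.
ALLOWED_LICENCES = {"CC0-1.0", "CC-BY-4.0", "direct-licence"}


@dataclass
class ManifestEntry:
    """One row of a published dataset manifest (hypothetical schema)."""
    track_id: str
    rights_holder: str
    licence: Optional[str]
    voice_consent: bool = False  # explicit written permission on file


def can_train_on(entry: ManifestEntry, uses_voice: bool = False) -> bool:
    """Allow training only on licensed material, and voice use only with consent."""
    if entry.licence not in ALLOWED_LICENCES:
        return False
    if uses_voice and not entry.voice_consent:
        return False
    return True


manifest = [
    ManifestEntry("stem-001", "Producer A", "CC-BY-4.0"),
    ManifestEntry("stem-002", "Label B", None),  # no licence recorded
    ManifestEntry("vox-003", "Singer C", "direct-licence", voice_consent=True),
]

cleared = [e.track_id for e in manifest if can_train_on(e)]
voice_cleared = [e.track_id for e in manifest if can_train_on(e, uses_voice=True)]
print(cleared)        # ['stem-001', 'vox-003']
print(voice_cleared)  # ['vox-003']
```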

Private innovation: KushLingo as an example of culturally grounded AI

What KushLingo offers (site data)

KushLingo presents an AI‑enabled language and culture learning product that emphasizes native speaker tutors, cultural mentoring, and AI‑powered visuals and pronunciation feedback. Site figures and product descriptions suggest broad scope: the platform claims 50,000+ learners, 91+ African languages, 500+ active teachers, a presence in 30+ countries, and an average headline tutor rate of $25+ per hour. The product bundles human mentors (KushMind mentors) with AI tools for pronunciation comparison, cultural capsules, and gamified learning.

Why this is relevant to creative ecosystems

KushLingo demonstrates how AI products can preserve intangible cultural assets while creating paid work for native speakers (heritage guides). This is a model of value capture: the technology amplifies demand for human expertise rather than replacing it. For music and film, similar marketplaces for voice mentors, dialect coaches, and griot consultants can be built so that AI outputs are grounded in human authenticity and paid expertise.

Putting it together — an engineering and governance blueprint

Data & model layer (what CTOs and builders must do)

- Dataset commons: fund and host consented corpora (text, transcribed dialogue, music stems, annotated visuals) with origin metadata and consent logs.

- Model cards & audit trails: every model must publish a card with training data summary, known biases, and license for reuse.

- Access tiers: offer sandboxed access for creatives, with commercial‑use licenses tied to revenue‑share and attribution terms.
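
One lightweight way to hold releases to the model card requirement is a completeness check in the release process. The field names below are assumptions that mirror the three items listed above (training data summary, known biases, license); they are not taken from any particular model card standard.

```python
# Hypothetical field names, mirroring the three requirements listed above.
REQUIRED_FIELDS = ("training_data_summary", "known_biases", "license")


def missing_fields(card: dict) -> list:
    """Return the required fields that are absent or empty in a model card."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = card.get(field)
        if isinstance(value, str):
            value = value.strip()  # whitespace-only text counts as empty
        if not value:  # None, "", or an empty list all count as missing
            missing.append(field)
    return missing


draft_card = {
    "model_name": "hypothetical-dialogue-model",  # assumed name for illustration
    "training_data_summary": "Consented dialogue transcripts with origin metadata",
    "known_biases": [],
    "license": "  ",
}

print(missing_fields(draft_card))  # ['known_biases', 'license']
```

Treating an empty bias list as incomplete is a deliberate choice in this sketch: it forces authors to state what they tested instead of leaving the section blank.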

Product & commercialization layer (what founders and CMOs must design)

- Monetization primitives: subscriptions, micro‑payments for premium samples, and co‑design commissions for local artists.

- Procurement pilots: public institutions should commit to buying pilot outputs (subtitles, educational content) from local startups to create demand signals.

- Creative marketplaces: platforms where artists can license stems, voices, or cultural motifs to commercial users with clear revenue splits.
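
A clear revenue split is easier to audit when it is computed in integer minor units (kobo, cents) so that no money is lost to rounding. The following sketch uses a largest-remainder allocation; the parties and the 60/30/10 split are invented for illustration and are not a recommended rate.

```python
def split_revenue(amount_minor: int, shares_bps: dict) -> dict:
    """Split an amount (in minor currency units) by shares in basis points.

    Shares must sum to 10,000 bps (100%). Units left over after rounding down
    go to the parties with the largest fractional remainders.
    """
    if sum(shares_bps.values()) != 10_000:
        raise ValueError("shares must sum to 10,000 basis points")

    payouts = {
        party: amount_minor * bps // 10_000 for party, bps in shares_bps.items()
    }
    leftover = amount_minor - sum(payouts.values())

    by_remainder = sorted(
        shares_bps,
        key=lambda party: (amount_minor * shares_bps[party]) % 10_000,
        reverse=True,
    )
    for party in by_remainder[:leftover]:
        payouts[party] += 1
    return payouts


# Illustrative split only: 60% artist, 30% platform, 10% dataset commons.
example = split_revenue(10_001, {"artist": 6000, "platform": 3000, "data_commons": 1000})
print(example)  # {'artist': 6001, 'platform': 3000, 'data_commons': 1000}
```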

Governance & ethics layer (what policymakers and legal teams must enable)

- IP & consent frameworks: model commercial clauses that protect contributors and require benefit‑sharing when public data is used.

- Transparency rules: mandate disclosure of AI use in promotional materials and credits where AI materially shaped creative output.

- Independent audits & community redress: create audit mechanisms and complaint channels for cultural misrepresentation and takedown procedures for harmful deepfakes.

Making the Ecosystem Profitable: Practical Steps for Governments, CTOs and Marketers

For governments & funders

- Create measurable KPIs (jobs created, startups commercialised, datasets published) and publish quarterly outcomes tied to public grants.

- Condition public grants on open governance: require model cards, dataset manifests and benefit-sharing clauses when public data or funds are used.

- Use procurement pilots (e.g., public broadcaster commissions) to create predictable revenue for local AI startups and creative partners.

For CTOs & platform leads

- Publish model cards and keep immutable prompt/provenance logs for high-risk creative outputs.

- Implement review workflows and role-based approvals in the CMS and generation pipeline so that every public asset has a human sign-off.

- Design monetisation integrations (subscription, micro‑payments, creator royalties) in platform roadmaps and API hooks for attribution metadata.
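
Truly immutable logs need storage-level guarantees, but a hash chain offers a simple, tamper-evident approximation: each entry commits to the one before it, so any later edit breaks verification. The sketch below is one possible design with hypothetical payload fields, not a prescribed format.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log: list, payload: dict) -> dict:
    """Append a payload, chaining it to the hash of the previous entry."""
    prev_hash = log[-1]["entry_hash"] if log else GENESIS
    entry = {
        "prev": prev_hash,
        "payload": payload,
        "entry_hash": _entry_hash(prev_hash, payload),
    }
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash; False means an entry was altered or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["entry_hash"] != _entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["entry_hash"]
    return True


audit_log = []
append_entry(audit_log, {"asset": "asset-001", "event": "generated"})
append_entry(audit_log, {"asset": "asset-001", "event": "approved", "reviewer": "reviewer-17"})
print(verify(audit_log))  # True

audit_log[0]["payload"]["event"] = "edited-after-the-fact"
print(verify(audit_log))  # False: the chain no longer matches
```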

For senior marketers & brand leaders

- Use AI for velocity (thumbnails, promos, matchday content) but maintain brand disclosure and editorial oversight.

- Partner with local creatives for co‑branded AI drops, licensing revenue shares and limited-edition merchandise to keep value local.

- Measure beyond vanity metrics: track subscriptions, conversions and revenue per campaign to test commercial value.

Measuring Success: KPIs That Matter

Move beyond impressions. Useful KPIs for the sector:

- Creator revenue share captured and disbursed (monetised value that returns to local creators).

- Number of datasets published with documented ownership and consent.

- Startups graduating from initial grants to follow-on financing and procurement contracts.

- Jobs created in AI‑augmented creative roles (producers, prompt engineers, cultural reviewers).

- Sentiment ratio and escalation incidents per campaign (governance health indicator).
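
Several of these KPIs reduce to simple ratios once the raw counts are logged per campaign. The definitions below are working assumptions (the article does not fix formulas), and the sample numbers are invented purely to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class CampaignStats:
    """Raw per-campaign counts (hypothetical fields)."""
    impressions: int
    interactions: int
    positive_mentions: int
    negative_mentions: int
    revenue: float
    creator_payout: float


def engagement_rate(s: CampaignStats) -> float:
    """Interactions per impression (assumed definition)."""
    return s.interactions / s.impressions if s.impressions else 0.0


def sentiment_ratio(s: CampaignStats) -> float:
    """Positive mentions per negative mention; the floor of 1 avoids dividing by zero."""
    return s.positive_mentions / max(s.negative_mentions, 1)


def creator_revenue_share(s: CampaignStats) -> float:
    """Fraction of campaign revenue actually disbursed to creators."""
    return s.creator_payout / s.revenue if s.revenue else 0.0


# Invented sample numbers, for illustration only.
stats = CampaignStats(
    impressions=20_000,
    interactions=900,
    positive_mentions=120,
    negative_mentions=40,
    revenue=5_000.0,
    creator_payout=1_500.0,
)

print(f"engagement rate: {engagement_rate(stats):.3f}")
print(f"sentiment ratio: {sentiment_ratio(stats):.1f}")
print(f"creator revenue share: {creator_revenue_share(stats):.0%}")
```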

Practical Playbook: What to Do Tomorrow

- Publish a model card for any public model you release; include training data summary and licensing.

- Run a one-week pilot using AI for promo assets only; log model, prompts, and sign-offs.

- Convene a 4‑person cultural review board (local artists, legal, product, and community rep) to pre-approve guardrails.

- Negotiate at least one procurement pilot (broadcaster or municipality) to create a revenue runway for startups using local models.

- Create a revenue-share template for artists who contribute training data or co‑design assets.

The future we build with AI in African creativity will be remembered by the choices we make now: who keeps the copyright, who signs the pay cheque, and whose stories get framed by algorithms. Build systems that log sources, distribute value, and keep human judgment at the heart of release decisions, and you will not only preserve the soul of the art, you will expand the circle of who can create it. Let our inventions return songs to their singers, stories to their storytellers, and livelihoods to those who kept the music alive long before the first algorithm ever learned a beat.

Methodology & Sources

Research for this article drew on the specific case links listed below and synthesized them into practical analysis and recommendations. I did not invent facts beyond those contexts; the recommendations are standard best practices informed by these AI-curated cases.

Documents, media, and domains consulted (explicit list)

- Federal Ministry of Communications, Innovation and Digital Economy (Nigeria) — NCAIR & Google AI Fund announcement: https://fmcide.gov.ng/ncair-collaborates-with-google-to-launch-ai-fund-towards-empowering-local-startups/

- NCAIR1 organization page on Hugging Face (open model): https://huggingface.co/NCAIR1

- GOtv Uganda X post (matchday AI image): https://x.com/GOtvUganda/status/2006005299279483026

- DStv Nigeria X response post: https://x.com/DStvNg/status/2006015067293163657

- BBC Future — coverage on India’s rapid adoption of AI in cinema: https://www.bbc.com/future/article/20251223-why-indian-cinema-is-awash-with-ai

- YouTube Music — AI artist example (Zahri track): https://music.youtube.com/watch?v=HiHqCUTx708

- Research references mentioned in our planning (ethics/governance): Governance of Generative AI in Creative Work — referenced as an arXiv research context (arxiv.org) used to frame worker/ethics considerations.
