Beyond Granular Control: Why AI Marketing Demands a New Ethical Framework for Global Markets

The AI Marketing Paradox and the Imperative for Trust

The pervasive integration of Artificial Intelligence into marketing strategies has ushered in an era of unprecedented personalization, promising hyper-targeted campaigns and enhanced user engagement. Yet, this technological leap presents a profound paradox: as AI systems grow more sophisticated, the ethical challenges surrounding data privacy and user trust escalate dramatically. This article argues that prevailing granular control models for data privacy are fundamentally insufficient, particularly in global markets with low digital literacy. A new, localized, and more protective 'Ethical Framework for AI Marketing' is not merely a recommendation but an urgent imperative to ensure sustainable growth and safeguard vulnerable populations.

The Global Problem: The Flawed Promise of Fine-Grained Control

The current paradigm of data privacy often relies on granular control: users manage their data preferences through precise, fine-grained settings. This model, while well-intentioned, frequently creates an illusion of agency once it meets the complexity of modern AI systems. Advanced AI-powered marketing makes precise control elusive and exposes systemic weaknesses that call for a more robust ethical framework, one that addresses both the ethical dilemmas of AI marketing and the creeping influence of surveillance capitalism.

The Opacity of AI: When Algorithms Obscure Consent

The sophisticated, often 'black-box' nature of AI algorithms makes it exceedingly difficult for the average user to comprehend how their data is collected, processed, and leveraged for personalized advertising. This opacity hinders genuine informed consent, reducing it to passive acceptance rather than active choice. When users cannot understand the implications of sharing their data, the foundation of consent crumbles, revealing a significant gap between digital literacy and informed consent that AI technology widens.

The Digital Divide: Unequal Capacity for Control

Compounding the issue is the vast digital divide that exists across global markets. Varying levels of digital literacy, access to technology, and understanding of online privacy norms significantly compromise the effectiveness of existing control measures. In regions where internet access is nascent or digital education is limited, the expectation that users will meticulously manage complex privacy settings is unrealistic. This disparity highlights the data privacy issues developing countries face, demonstrating how a universal granular control approach is impractical and inequitable.

Case Study: Meta's AI Personalization – A Blueprint for Ethical Failure

To illustrate the shortcomings of current ethical approaches and the exploitation risks of AI-driven personalization, we examine Meta's recent strategic moves in this area. These initiatives offer a real-world example of how even leading tech companies can inadvertently create a blueprint for ethical failure when they prioritize aggressive integration over robust, context-aware user protection.

Meta's AI Recommendations: The Push for Deeper Integration

Meta has aggressively moved to enhance recommendations across its Facebook and Instagram platforms, utilizing advanced AI, which signals a strategic push for deeper integration of user data. A particularly controversial aspect highlighted in recent news reports is the company's stated intention to utilize conversational data from its AI chatbots for targeted advertising. This represents a significant pivot towards more intimate data utilization, pushing the boundaries of traditional ad targeting and raising immediate ethical questions about the nature of user privacy.

The Insufficiency of Opt-Out and Keyword Guardrails

Critically, Meta's policy of not allowing users to opt out of these AI-driven recommendations, combined with its reliance on self-imposed 'guardrails' (such as blocking sensitive topics via keywords), demonstrates a flawed approach to ethical safeguards. Keyword-based filters are designed to prevent misuse, but malicious actors can easily bypass them. For example, a hypothetical child trafficker could promote a seemingly innocent, child-friendly corporate social event in Nigeria, using Meta's ad platform to reach a broad, local audience. By retargeting attendees of that event, they could then craft hyper-personalized tourism or job offers that are, in reality, a front for a human trafficking scheme. This chilling scenario illustrates why current corporate safeguards are inadequate: sophisticated bad actors can exploit proxy signals that keyword filters cannot detect, leaving vulnerable populations exposed.
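The weakness of keyword matching can be shown with a minimal sketch. This is not Meta's actual guardrail, whose internals are not public; the blocklist, the function name `passes_keyword_filter`, and both sample ads are hypothetical and exist only to show that a filter keyed on words cannot see intent.

```python
import re

# Hypothetical blocklist for illustration; real platform guardrails are not public.
BLOCKED_TERMS = {"trafficking", "smuggling"}


def passes_keyword_filter(ad_text: str) -> bool:
    """Return True when no blocked term appears as a whole word in the ad text."""
    words = set(re.findall(r"[a-z]+", ad_text.lower()))
    return words.isdisjoint(BLOCKED_TERMS)


# An overtly harmful ad contains a blocked term and is rejected.
overt_ad = "Human trafficking ring seeks recruits"

# A proxy ad uses only innocuous language, so the filter has nothing to match.
proxy_ad = "Family fun day this Saturday! Free entry, games, and prizes for children."

print(passes_keyword_filter(overt_ad))  # False
print(passes_keyword_filter(proxy_ad))  # True
```

The second ad passes because the harm lies in who placed it and how the audience is later retargeted, neither of which is visible in the ad text itself.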

The Amplified Risk: Hyper-Personalization's Peril in Underserved Markets

The ethical dilemmas and exploitation risks inherent in AI-powered personalization are far greater and more damaging in underserved markets with low digital literacy. Regions like Nigeria and the broader African continent present unique vulnerabilities, where the promise of personalization can quickly turn into peril. This demands a fundamental reevaluation of African tech policy on AI and digital rights.

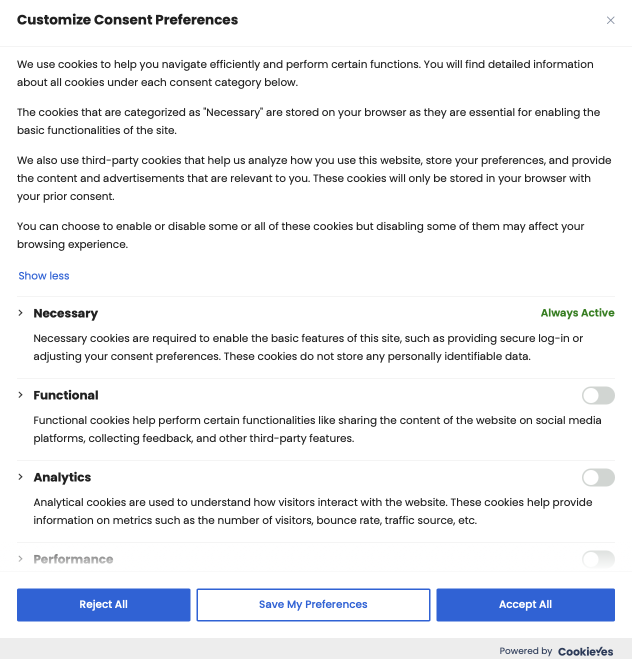

Implied Privacy: The Flawed Consent Landscape

In environments characterized by low digital literacy, the very notion of earned user trust through complex cookie banners and dense privacy policies is fundamentally flawed. Users often grant consent without a thorough understanding of the complex implications of data sharing and personalized advertising. This leads to a state of implied privacy rather than genuine, informed consent, where the user's perceived agency is an illusion. Global tech companies must grapple with how to ensure truly informed consent for AI-powered marketing in low-digital-literacy markets, recognizing that a simple checkbox does not equate to genuine understanding or agreement.

The Technical Gap: Reliance on Insecure Data Collection

A significant technical disparity further exacerbates risks in underserved markets. For instance, an estimated 85% of businesses in Nigeria are unable to implement privacy-centric, first-party data solutions, such as Meta's server-side Conversions API (CAPI), due to a lack of infrastructure, skilled personnel, or financial resources. This technical gap leads to an over-reliance on less secure and more privacy-invasive collection methods, such as the browser-based Meta Pixel. That dependence complicates regulatory compliance and sharply increases the risk of exploitation through AI personalization, because user data becomes more vulnerable to breaches and misuse.

Societal Harm: Beyond Commercial Damage

Beyond the general risks of brand damage and regulatory fines, hyper-personalized ad systems deployed in environments with weaker societal and technical safeguards can be easily exploited for malign purposes, creating severe non-commercial risks. These include, but are not limited to, political manipulation through targeted misinformation campaigns, sophisticated scams designed to defraud vulnerable populations, and the predatory marketing of harmful products and services. Such exploitation can cause significant societal harm, exacerbate existing inequalities, and erode democratic processes, moving far beyond mere commercial risk.

The Proposed Solution: An Ethical Localization & Proactive Safeguards Framework

To navigate this complex landscape and harness the opportunities of AI while mitigating its profound risks, executive leaders must adopt a proactive, ethics-driven approach to AI-powered marketing. This requires a 'Trust-First Framework' that is both robust and context-aware. Its core is an 'Ethical Localization & Proactive Safeguards' component tailored to the unique challenges of underserved markets. The framework sets out concrete principles for corporate digital responsibility and shows how trust-first marketing can be localized and implemented in African markets.

Shifting Responsibility: A Mandate for Global Tech Companies

Global tech companies bear a heightened ethical responsibility to protect vulnerable users in underserved markets. Moving beyond a one-size-fits-all approach, platforms must acknowledge their outsized influence and the potential for harm in regions where users have less agency or understanding. This creates a moral imperative for global platforms to adopt a more protective stance and to develop localized AI ethics guidelines that reflect regional realities.

Guiding Principle 1: Stricter Default Privacy Settings

Global tech companies must implement the most protective privacy settings as the default for users in regions with low digital literacy. This fundamental change shifts the burden of data protection from the individual, who may lack the understanding or tools to manage complex settings, to the platform provider. By making privacy the default, companies can ensure inherent protection for their most vulnerable users, rather than relying on opt-out mechanisms that often fail in these contexts.
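One way to picture this principle is as a default-settings lookup, sketched below under stated assumptions. The setting names, the region list `PROTECTIVE_DEFAULT_REGIONS`, and the helper functions are all hypothetical and do not describe any platform's real configuration; the point is only that data use starts switched off and changes solely through an explicit user choice.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PrivacySettings:
    # Every data use is off unless explicitly enabled.
    personalized_ads: bool = False
    chatbot_data_for_ads: bool = False
    cross_site_tracking: bool = False


# Hypothetical set of regions that receive the most protective defaults.
PROTECTIVE_DEFAULT_REGIONS = frozenset({"NG"})

# Hypothetical permissive defaults applied elsewhere.
STANDARD_DEFAULTS = PrivacySettings(
    personalized_ads=True,
    chatbot_data_for_ads=True,
    cross_site_tracking=True,
)


def default_settings(region_code: str) -> PrivacySettings:
    """Return the most protective settings for flagged regions."""
    if region_code.upper() in PROTECTIVE_DEFAULT_REGIONS:
        return PrivacySettings()
    return STANDARD_DEFAULTS


def opt_in(settings: PrivacySettings, **choices: bool) -> PrivacySettings:
    """Apply explicit user choices; anything not chosen keeps its default."""
    return replace(settings, **choices)


user_settings = opt_in(default_settings("NG"), personalized_ads=True)
print(user_settings)
```

A user who does nothing stays fully protected, which is the reverse of an opt-out model where inaction means maximum data sharing.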

Guiding Principle 2: Radically Simplified and Localized Consent Language

Consent mechanisms must move beyond generic legal jargon and be radically simplified and localized. This means developing consent prompts that use local languages and dialects, culturally relevant metaphors, and simple visual cues to ensure genuine, informed consent. This principle directly addresses the gap between digital literacy and informed consent, making consent understandable and meaningful for all users, regardless of their linguistic or cultural background.

Guiding Principle 3: Proactive Threat Modeling and Mitigation

Platforms must establish and regularly perform region-specific threat modeling to anticipate and prevent the exploitation of hyper-personalization systems for malign purposes. This includes identifying potential vectors for misinformation, smoke-screen campaigns, financial scams, and other forms of digital harm that are prevalent and particularly damaging in low-digital-literacy environments. This pre-emptive approach is crucial where societal and technical safeguards are less developed, and it helps close gaps that global AI governance policy leaves open in vulnerable regions.
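A region-specific threat model can start as a simple risk register. The sketch below uses the common likelihood-times-impact scoring convention, which is an assumption on our part rather than a method the framework prescribes. The `Threat` class, the `prioritize` helper, and every rating are placeholders for illustration, not measured data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    name: str
    likelihood: int  # 1 (rare) to 5 (frequent), assigned by regional reviewers
    impact: int      # 1 (minor) to 5 (severe)

    @property
    def risk_score(self) -> int:
        return self.likelihood * self.impact


def prioritize(threats, threshold):
    """Return threats scoring at or above the threshold, highest risk first."""
    flagged = [t for t in threats if t.risk_score >= threshold]
    return sorted(flagged, key=lambda t: t.risk_score, reverse=True)


# Placeholder ratings for illustration only.
register = [
    Threat("targeted misinformation", likelihood=4, impact=5),
    Threat("smoke-screen event campaign", likelihood=2, impact=5),
    Threat("financial scam ads", likelihood=5, impact=4),
    Threat("low-severity nuisance ads", likelihood=2, impact=2),
]

for threat in prioritize(register, threshold=10):
    print(threat.name, threat.risk_score)
```

Repeating the exercise per region matters because the same vector can rate very differently depending on local safeguards and literacy levels.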

Conclusion: Building a New Era of Trust in AI Marketing

The rapid evolution of AI-powered marketing demands a paradigm shift in how we approach data privacy and user trust, especially in a globally interconnected yet unevenly digitally literate world. The limitations of existing granular control measures are starkly evident, particularly in underserved markets where the risks of exploitation are amplified. This article has made the case for an ethically localized AI marketing framework built on stricter default privacy settings, radically simplified and localized consent, and proactive threat modeling. Industry leaders, policymakers, and consumers must collectively commit to corporate digital responsibility, fostering a future where AI marketing genuinely serves users while upholding the highest ethical standards across global markets. Only through such a concerted effort can we build a new era of trust in AI marketing, ensuring that technology empowers rather than exploits, and that innovation is always tethered to ethical responsibility.
